It is hard to predict in advance whether an online system will collapse under load. This is true of the primary election's online interface as well as of government sites such as the vaccine registration portal. We asked experts why things do not always work the way they should.

When they really attack

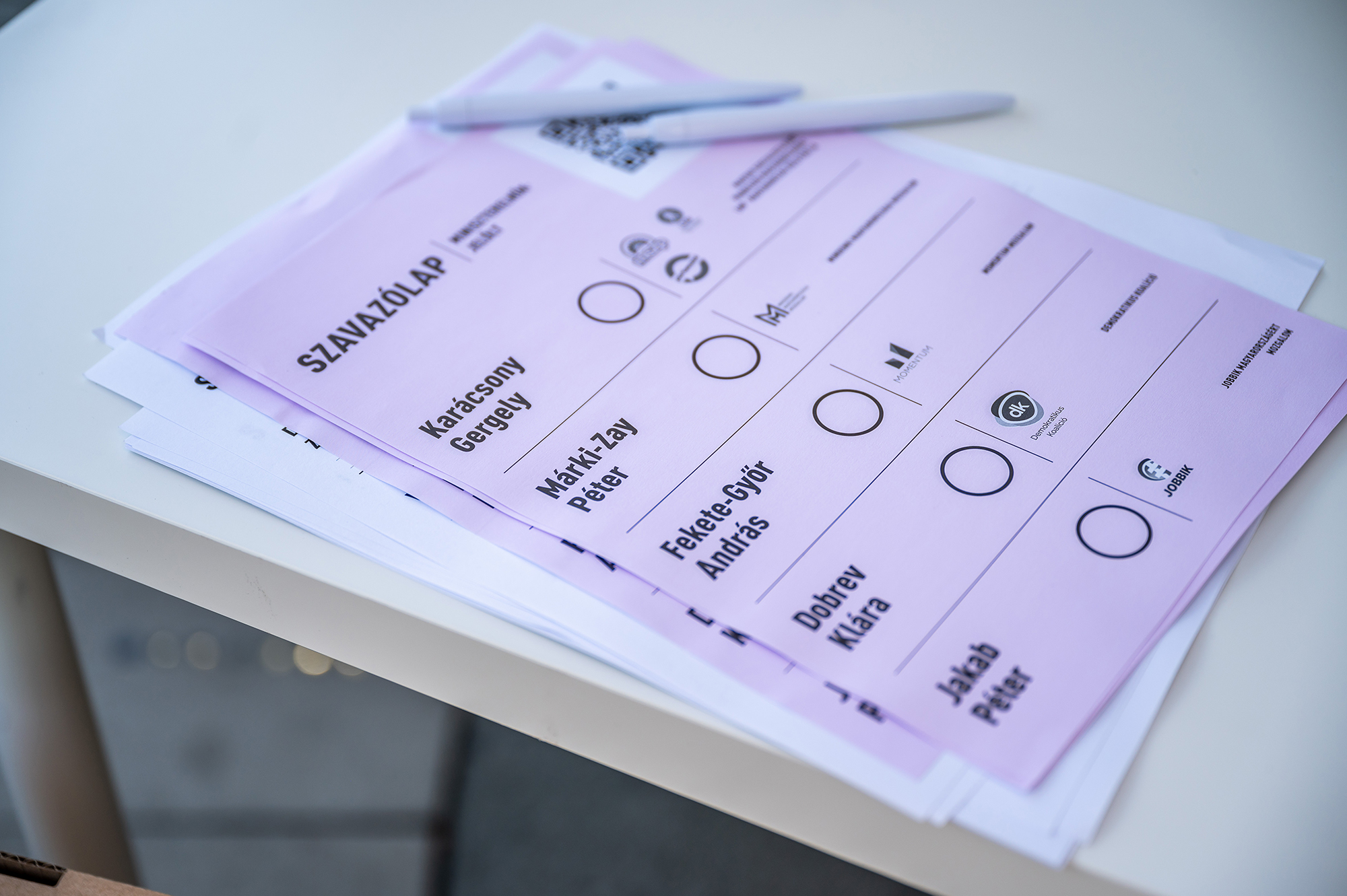

The comparison with vaccine registration is a rather poor one, because that is a dead-simple page built from hundreds of millions, whereas this is a complete election system run with volunteers. There, the outage may really have been caused by plain load; the primary's online interface was attacked from foreign IP addresses over a route that bypassed Cloudflare. A real voter could only come through Cloudflare

– András Danka Miklós, Momentum's technology director, told our newspaper. In the case of the primary, undersized infrastructure, a shortage of adequate resources and, as it turned out, a deliberate attack all made smooth operation difficult.

When there are simply too many visitors

In general, every service and website has an average level of traffic for which operators size the infrastructure. Predictable events that are busier than average, such as Black Friday, have to be planned for separately. When a site goes down on such an occasion, it is because the operators underestimated the size of the rush. Preliminary surveys can of course be made, but the estimated thousand users can easily turn out to be ten thousand, Gábor Szöllősi, an IT infrastructure expert, explains to our paper.

To cope with periodic increases in traffic, network bandwidth or the number of physical servers may also have to be expanded. This investment is not necessarily worthwhile, however, because on 333 of the 365 days of the year that many resources are not needed. The best case is when resources can be reallocated within the company, even if only for a week or a few days, but this is not always possible. The other options are to rent the resources (servers) or to run the service in the cloud. The latter is simple: if the website or webshop scales well, it can run on a hundred servers instead of ten for, say, Black Friday. In the cloud, you pay only for those few days, which is negligible compared with buying physical hardware for the same purpose. According to Szöllősi, this is one of the reasons cloud technology is good and flexible: it makes it possible to react quickly to additional load.

“Downtime usually means lost revenue, because people cannot buy, and that loss is often greater than what it would have cost the operators to prepare in advance. You need to be prepared for the load at every level, from the infrastructure to the application. There has to be enough network capacity and bandwidth, enough processor power and memory, and the applications you use, the programming side, have to be in order. If anything runs out or fails at any layer, the whole thing can topple over,” says the expert.
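
The trade-off between buying peak capacity and renting it for a few days can be sketched with simple arithmetic. In the sketch below, only the 333-of-365 split and the ten-versus-a-hundred servers come from the article. The price per server-day is a hypothetical figure, and charging the same unit price for owned and rented capacity is a deliberate simplification.

```python
# Hypothetical figures for illustration; only the 333/365 split and the
# 10-vs-100 server counts are taken from the article.
DAYS_PER_YEAR = 365
QUIET_DAYS = 333
PEAK_DAYS = DAYS_PER_YEAR - QUIET_DAYS  # 32 busy days

BASE_SERVERS = 10           # enough for an average day
PEAK_SERVERS = 100          # needed during the rush
COST_PER_SERVER_DAY = 5.0   # assumed flat price, same for both models


def yearly_cost_fixed(servers, cost=COST_PER_SERVER_DAY):
    """Cost of keeping peak capacity running all year."""
    return servers * cost * DAYS_PER_YEAR


def yearly_cost_elastic(base, peak, peak_days, cost=COST_PER_SERVER_DAY):
    """Cost of running base capacity and scaling up only on peak days."""
    quiet_days = DAYS_PER_YEAR - peak_days
    return (base * quiet_days + peak * peak_days) * cost


fixed = yearly_cost_fixed(PEAK_SERVERS)                                # 182500.0
elastic = yearly_cost_elastic(BASE_SERVERS, PEAK_SERVERS, PEAK_DAYS)   # 32650.0
print(f"fixed: {fixed:.0f}, elastic: {elastic:.0f}")
```

Under these assumed numbers, paying for the extra ninety servers only on the busy days costs less than a fifth of keeping them all year.
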
Every system has a breaking point: a level of load at which it can no longer serve anything and requests merely pile up. Meanwhile, monitoring is always a key issue: operators need to be continuously aware of resource usage and to set alarms in the monitoring system.
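
What setting alarms can mean in practice is shown in the minimal sketch below. The metric names and threshold percentages are hypothetical, not values recommended by the expert; a real monitoring system would read the metrics from the servers and notify the operators.

```python
from dataclasses import dataclass


@dataclass
class Threshold:
    warn: float      # utilisation (%) at which a warning is raised
    critical: float  # utilisation (%) at which a critical alarm is raised


# Hypothetical thresholds, chosen only for illustration.
THRESHOLDS = {
    "cpu": Threshold(warn=70, critical=90),
    "memory": Threshold(warn=75, critical=90),
    "bandwidth": Threshold(warn=60, critical=85),
}


def evaluate(metrics: dict[str, float]) -> dict[str, str]:
    """Return the alarm level for every metric that crossed a threshold."""
    alarms = {}
    for name, value in metrics.items():
        limit = THRESHOLDS.get(name)
        if limit is None:
            continue  # metric without a configured threshold
        if value >= limit.critical:
            alarms[name] = "critical"
        elif value >= limit.warn:
            alarms[name] = "warning"
    return alarms


print(evaluate({"cpu": 95, "memory": 50, "bandwidth": 61}))
```

The warning level sits well below the critical one so that operators hear about the trend before the breaking point is reached.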

How to intervene is a good question, because a lot can be done badly. When a site starts to slow down, many people tend to restart applications and servers. The trouble is that afterwards the system tries to serve a large number of requests at once, which makes the situation even worse. Intervening during a slowdown always requires knowledge of the system: we need to know which component is worth touching because doing so causes the least damage. Obviously it is best to have ready-made plans for such a case

– explains Szöllősi.
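
Why a restart can make things worse can be illustrated with a rough back-of-the-envelope model. It assumes, as a simplification, that requests arriving during the restart are queued or retried rather than abandoned, and that traffic and capacity are constant; all figures are hypothetical.

```python
def backlog_after_restart(arrival_rate, restart_seconds):
    """Requests that pile up while the service is down (assumes none give up)."""
    return arrival_rate * restart_seconds


def seconds_to_drain(backlog, arrival_rate, capacity):
    """Time needed to clear the backlog while new requests keep arriving.

    Returns None when there is no spare capacity, i.e. the backlog never clears.
    """
    spare = capacity - arrival_rate
    if spare <= 0:
        return None
    return backlog / spare


# Hypothetical site: 900 requests/s arriving, 1000 requests/s capacity,
# and a restart that takes 60 seconds.
backlog = backlog_after_restart(900, 60)          # 54000 waiting requests
drain_time = seconds_to_drain(backlog, 900, 1000)  # 540.0 seconds
print(backlog, drain_time)
```

With these numbers, a one-minute restart leaves the system nine minutes behind, and if traffic already exceeds capacity the backlog never clears at all.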

The keyword: testing

According to the expert, this brings us to the importance of testing, which should be outsourced. A test can, for example, generate the traffic of ten thousand users and show how a website would behave under such a load. Many operators skip this step, however: Szöllősi says that the vaccine registration site, for example, was not tested thoroughly enough. For load testing, only automated testing can simulate thousands of users, yet care must be taken that the process is not completely synthetic, because then it does not reflect how users really behave. Once people open a homepage they start browsing and searching, so real-life load works differently. According to Szöllősi, even for a webshop the effort should go into comprehensive testing that models average customer behavior.

The test that has been put together may not reflect real traffic. An application may have been tested all the way through, and yet when the real traffic arrives it turns out that it was never tested against that usage pattern. This leads to different resource usage and produces completely different load curves in the system. In live operation there will be overload in places that did not show up during testing. It is no coincidence that testing is a separate field within IT: it takes exceptional expertise to refine the methods so that they come close to reality.
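
The difference between a purely synthetic test and one that follows user behavior can be sketched as follows. Instead of sending every simulated user to the same page, the sketch assigns each one a journey drawn from a weighted mix. The journeys, paths and weights are hypothetical; a real test would derive them from traffic logs and then replay the plan with a load-testing tool.

```python
import random
from collections import Counter

# Hypothetical journeys through a webshop and their assumed share of users.
USER_JOURNEYS = {
    "browse": ["/", "/category", "/product"],
    "search": ["/", "/search", "/product"],
    "purchase": ["/", "/product", "/cart", "/checkout"],
}
JOURNEY_WEIGHTS = {"browse": 0.6, "search": 0.3, "purchase": 0.1}


def build_request_plan(users, seed=0):
    """Pick one journey per simulated user according to the weights."""
    rng = random.Random(seed)  # seeded so the plan is reproducible
    names = list(JOURNEY_WEIGHTS)
    weights = [JOURNEY_WEIGHTS[name] for name in names]
    plan = []
    for _ in range(users):
        journey = rng.choices(names, weights=weights, k=1)[0]
        plan.append(USER_JOURNEYS[journey])
    return plan


def requests_per_path(plan):
    """Count how many requests each path would receive."""
    return Counter(path for journey in plan for path in journey)


plan = build_request_plan(10_000)
for path, count in requests_per_path(plan).most_common():
    print(path, count)
```

The resulting counts show which pages would carry most of the load, which is exactly what a test that only hits the homepage fails to reveal.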

Whether a site has been paralyzed by real traffic or by a deliberate overload (denial-of-service) attack is difficult even for experts to determine right away, especially for an international site that can be visited from all over the world. But on an interface that concerns, say, Hungarian public affairs (the primary, vaccine registration), heavy US, Chinese or Russian traffic may be unjustified and suspicious. Network filters can now be applied according to the source of the traffic, even broken down by country, and access to a site from outside the country can be blocked altogether. It is also suspicious if most of the traffic consists of automated, robot-generated requests; these too can be filtered by a firewall or another network protection tool, explains Szöllősi. There are also providers that specialize in protection against overload attacks, the best known being Cloudflare (which was also used for the primary's online system). The essence of the service is that requests to a website's domain (say, a webshop) first go to Cloudflare and land on its servers, where all sorts of checks can be run on them: among other things, which browser the request comes from, where it comes from, and whether it is robot or real traffic. Only in the latter case is the request forwarded. The service thus screens out robots like a kind of firewall, which makes it more likely that overload attacks are prevented.
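
The kinds of checks described above (country of origin, browser, robot-like behavior) can be pictured with a toy filter. This is not how Cloudflare or any particular firewall works internally; the allow-list, the user-agent markers and the rate limit are all hypothetical values chosen for illustration.

```python
from dataclasses import dataclass

# Hypothetical rules, for illustration only.
ALLOWED_COUNTRIES = {"HU"}
BOT_MARKERS = ("curl", "python-requests", "bot")
MAX_REQUESTS_PER_MINUTE = 120


@dataclass
class Request:
    country: str               # two-letter country code of the source IP
    user_agent: str            # browser identification sent by the client
    requests_last_minute: int  # requests seen from this source recently


def decide(req: Request) -> str:
    """Return 'forward' for traffic that looks real, otherwise a block reason."""
    if req.country not in ALLOWED_COUNTRIES:
        return "block: outside allowed countries"
    agent = req.user_agent.lower()
    if any(marker in agent for marker in BOT_MARKERS):
        return "block: looks automated"
    if req.requests_last_minute > MAX_REQUESTS_PER_MINUTE:
        return "block: request rate too high"
    return "forward"


print(decide(Request("HU", "Mozilla/5.0 (Windows NT 10.0)", 4)))
print(decide(Request("US", "Mozilla/5.0 (Windows NT 10.0)", 4)))
print(decide(Request("HU", "python-requests/2.31", 900)))
```

Only requests that pass every check are forwarded to the protected site; everything else stops at the filter.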

Marjai János / 24.hu

Another form of overload attack is one in which the attackers generate so much traffic that it crowds out network resources, for example by consuming the bandwidth. The attacked server does not even have to run out of resources; sometimes so much traffic arrives through the botnets that the real traffic can no longer get through alongside the attacker's. According to Szöllősi, it is like a water pipe through which water is trickling along, until the attacker arrives with a high-pressure system, plugs it into the pipe and wins from then on. Hundreds of gigabits of botnet traffic can really only be defended against with network devices; the client can do little about it at the server level, says the expert. He adds that the system needed to carry out an overload attack can be rented on the black market almost as a ready-made service. The customer only has to enter the address to be attacked on a simple, user-friendly, point-and-click interface, and, having paid, does not have to deal with anything technical. In some cases it is very difficult to reconstruct afterwards who was actually behind the servers carrying out the attack, mainly because anonymity is aided by various offshore bank cards and accounts.
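
The water-pipe comparison can be put into numbers with a rough model. It assumes, as a simplification, that a saturated link drops legitimate and attack traffic in equal proportion; the link size and traffic volumes are hypothetical.

```python
def legitimate_share_delivered(link_gbps, legit_gbps, attack_gbps):
    """Fraction of legitimate traffic that still gets through the link.

    Assumes the link drops all traffic proportionally once it is full.
    """
    total = legit_gbps + attack_gbps
    if total <= link_gbps:
        return 1.0  # the link is not saturated, nothing is dropped
    return link_gbps / total


# Hypothetical 10 Gbit/s link carrying 1 Gbit/s of real traffic.
print(legitimate_share_delivered(10, 1, 0))    # no attack
print(legitimate_share_delivered(10, 1, 199))  # 199 Gbit/s botnet flood
```

Under these assumptions the flood leaves only about one in twenty real requests with a chance of arriving, even though the server itself may have plenty of spare capacity.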