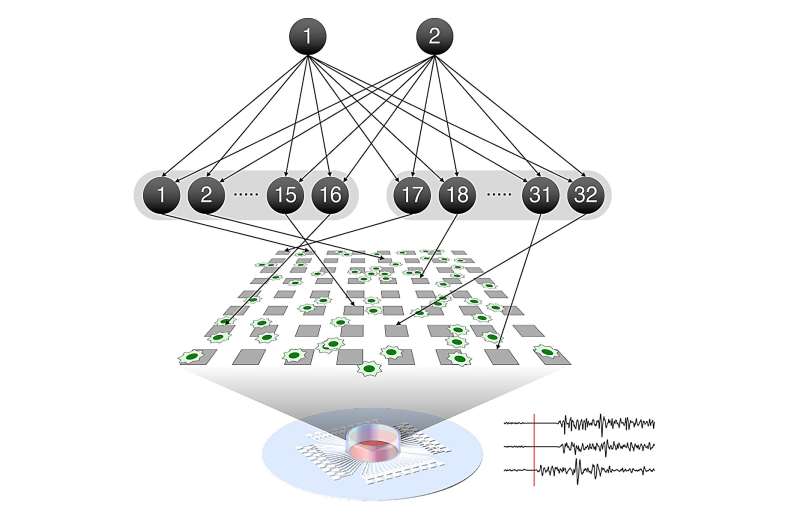

The experimental setup. Cultured neurons grew on top of electrodes. Patterns of electrical stimulation trained the neurons to reorganize so that they could distinguish two hidden sources. Waveforms at the bottom represent the spiking responses to a sensory stimulus (red line). Credit: RIKEN

An international collaboration between researchers at the RIKEN Center for Brain Science (CBS) in Japan, the University of Tokyo, and University College London has demonstrated that self-organization of neurons as they learn follows a mathematical theory called the free energy principle.

The principle accurately predicted how real neural networks spontaneously reorganize to distinguish incoming information, as well as how altering neural excitability can disrupt the process. The findings thus have implications for building animal-like artificial intelligences and for understanding cases of impaired learning. The study was published August 7 in Nature Communications.

When we learn to tell the difference between voices, faces, or smells, networks of neurons in our brains automatically organize themselves so that they can distinguish between the different sources of incoming information. This process involves changing the strength of connections between neurons and is considered the basis of learning in the brain.

Takuya Isomura from RIKEN CBS and his international colleagues recently predicted that this type of network self-organization follows the mathematical rules that define the free energy principle. In the new study, they put this hypothesis to the test in neurons taken from the brains of rat embryos and grown in a culture dish on top of a grid of tiny electrodes.

Once a person can distinguish two sensations, such as two voices, some of their neurons respond to one voice while others respond to the other. This selectivity is the result of neural network reorganization, which we call learning. In their culture experiment, the researchers mimicked this process by using the grid of electrodes beneath the neural network to stimulate the neurons in a specific pattern that mixed two separate hidden sources.
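
The article says only that the stimulation "mixed two separate hidden sources." The sketch below assumes a hypothetical scheme (32 electrodes split into two groups of 16, each group driven mostly by one source with an assumed probability of 0.75; `N_ELECTRODES`, `P_MAJOR` and every function name are illustrative, not from the study) to show what such mixed input looks like and that both sources remain recoverable from it. The decoder is an ideal Bayesian observer that is handed the true mixing model, which the cultured neurons never had; it shows that the task is solvable, not how the neurons solved it.

```python
import numpy as np

# HYPOTHETICAL stimulation scheme (an assumption for illustration, not the
# study's published protocol): 32 electrodes in two groups of 16.
N_ELECTRODES = 32
P_MAJOR = 0.75  # assumed weight of each group's "dominant" source
STATES = [(0, 0), (0, 1), (1, 0), (1, 1)]  # all joint values of (source 1, source 2)


def mixing_probs(s1: int, s2: int) -> np.ndarray:
    """Per-electrode stimulation probability given the two binary hidden sources."""
    half = N_ELECTRODES // 2
    group_a = np.full(half, P_MAJOR * s1 + (1 - P_MAJOR) * s2)  # mostly source 1
    group_b = np.full(half, (1 - P_MAJOR) * s1 + P_MAJOR * s2)  # mostly source 2
    return np.concatenate([group_a, group_b])


def generate_trials(n_trials: int, rng: np.random.Generator):
    """Draw independent 50/50 binary sources and the mixed stimulation they produce."""
    sources = rng.integers(0, 2, size=(n_trials, 2))
    stim = np.array(
        [rng.random(N_ELECTRODES) < mixing_probs(s1, s2) for s1, s2 in sources]
    ).astype(int)
    return sources, stim


def infer_sources(stim_row: np.ndarray, eps: float = 0.01) -> np.ndarray:
    """Posterior over STATES for one stimulation pattern, assuming a flat prior
    and a Bernoulli likelihood (probabilities clipped to avoid log(0))."""
    log_lik = []
    for s1, s2 in STATES:
        p = np.clip(mixing_probs(s1, s2), eps, 1 - eps)
        log_lik.append(np.sum(stim_row * np.log(p) + (1 - stim_row) * np.log(1 - p)))
    log_lik = np.array(log_lik)
    post = np.exp(log_lik - log_lik.max())  # subtract the max for numerical stability
    return post / post.sum()


def decoding_accuracy(n_trials: int = 2000, seed: int = 0) -> float:
    """Fraction of trials where the most probable state equals the true sources."""
    rng = np.random.default_rng(seed)
    sources, stim = generate_trials(n_trials, rng)
    correct = 0
    for (s1, s2), row in zip(sources, stim):
        best = STATES[int(np.argmax(infer_sources(row)))]
        correct += best == (int(s1), int(s2))
    return correct / n_trials
```

Calling `decoding_accuracy()` reports how often the ideal observer recovers both sources from a single pattern. Under these assumed probabilities that happens on the vast majority of trials, which is the sense in which the mixed stimulation still carries the information the neurons learned to separate.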

After 100 training sessions, the neurons had automatically become selective: some responded very strongly to source #1 and very weakly to source #2, and others showed the reverse pattern. Drugs that either raise or lower neuron excitability disrupted the learning process when added to the culture beforehand. This indicates that the cultured neurons do just what neurons are thought to do in the working brain.

The free energy principle states that this type of self-organization will follow a pattern that always minimizes the free energy in the system. To determine whether this principle is the guiding force behind neural network learning, the team used the real neural data to reverse engineer a predictive model. They then fed the data from the first 10 electrode training sessions into the model and used it to make predictions about the remaining 90 sessions.
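
The article does not spell out the quantity being minimized. For orientation only, the standard textbook definition of variational free energy is given below, in generic notation that is not taken from the study: sensory observations $o$, hidden causes $s$, a generative model $p(o, s)$, and an approximate posterior $q(s)$.

$$
F[q] = \mathbb{E}_{q(s)}\left[\ln q(s) - \ln p(o, s)\right]
     = D_{\mathrm{KL}}\left[q(s) \,\|\, p(s \mid o)\right] - \ln p(o)
$$

The second form follows from writing $p(o, s) = p(s \mid o)\,p(o)$. Because the KL divergence is never negative, $F$ is an upper bound on surprise, $-\ln p(o)$, and minimizing $F$ with respect to $q$ pulls $q(s)$ toward the true posterior $p(s \mid o)$. In the culture experiment, the hidden causes $s$ would plausibly correspond to the two hidden sources mixed into the stimulation.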

At each step, the model accurately predicted the responses of neurons and the strength of connectivity between them. This means that simply knowing the initial state of the neurons is enough to determine how the network will change over time as learning occurs.
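
The workflow described here, fitting a model to the first 10 sessions and then forecasting the remaining 90, can be illustrated with a deliberately simple stand-in. The sketch below is NOT the free-energy-based model used in the study: it assumes a hypothetical rule in which a single connection strength `w` approaches a `target` value at rate `eta` per session (all names and values are illustrative). It estimates `eta` and `target` from the early sessions by linear regression and rolls the fitted rule forward.

```python
import numpy as np

# HYPOTHETICAL toy model: NOT the free-energy-based model used in the study.
# One connection strength w approaches a target value at rate eta per session.


def simulate_sessions(n_sessions: int, eta: float, target: float,
                      w0: float = 0.0, noise_sd: float = 0.0, seed: int = 0) -> np.ndarray:
    """Generate a synthetic trajectory w[0..n_sessions-1] under the toy update rule."""
    rng = np.random.default_rng(seed)
    w = np.empty(n_sessions)
    w[0] = w0
    for t in range(n_sessions - 1):
        w[t + 1] = w[t] + eta * (target - w[t]) + rng.normal(0.0, noise_sd)
    return w


def fit_update_rule(w_early: np.ndarray) -> tuple[float, float]:
    """Estimate (eta, target) from early sessions.

    The rule implies delta = eta * target - eta * w, so a straight-line fit of
    the per-session change against w gives slope = -eta, intercept = eta * target.
    """
    delta = np.diff(w_early)
    slope, intercept = np.polyfit(w_early[:-1], delta, deg=1)
    eta = -slope
    return float(eta), float(intercept / eta)


def predict_forward(w_last: float, eta: float, target: float, n_steps: int) -> np.ndarray:
    """Roll the fitted rule forward from the last observed session."""
    preds = np.empty(n_steps)
    w = w_last
    for i in range(n_steps):
        w = w + eta * (target - w)
        preds[i] = w
    return preds


# Fit on the first 10 sessions, predict the remaining 90 (noise-free synthetic data).
observed = simulate_sessions(100, eta=0.05, target=1.0)
eta_hat, target_hat = fit_update_rule(observed[:10])
predicted = predict_forward(observed[9], eta_hat, target_hat, n_steps=90)
max_error = float(np.max(np.abs(predicted - observed[10:])))
print(f"eta={eta_hat:.4f}, target={target_hat:.4f}, max error={max_error:.2e}")
```

With noise-free synthetic data the fitted rule reproduces the later sessions essentially exactly; setting `noise_sd` above zero makes both the fitted parameters and the forecast approximate. How well the study's own model extrapolated is a result reported in the paper, not something this toy can reproduce.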

“Our results suggest that the free-energy principle is the self-organizing principle of biological neural networks,” says Isomura. “It predicted how learning occurred upon receiving particular sensory inputs and how it was disrupted by alterations in network excitability induced by drugs.”

“Although it will take some time, ultimately, our technique will allow modeling the circuit mechanisms of psychiatric disorders and the effects of drugs such as anxiolytics and psychedelics,” says Isomura. “Generic mechanisms for acquiring the predictive models can also be used to create next-generation artificial intelligences that learn as real neural networks do.”

More information:

Nature Communications (2023). DOI: 10.1038/s41467-023-40141-z

Citation:

Mathematical theory predicts self-organized learning in real neurons (2023, August 7) retrieved 28 September 2023 from https://medicalxpress.com/news/2023-08-mathematical-theory-self-organized-real-neurons.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.
