Alexa, Amazon’s ubiquitous voice assistant, is getting an upgrade. The company revealed some of these changes in a virtual event today. One of the most interesting developments is that users can now personalize their Alexa-enabled devices to listen for specific sound events in their homes.

Amazon also unveiled new features and products, including additional accessories for Ring and Halo devices, and access to invite-only devices such as the Always Home Cam and a cute home-roving, beat-boxing robot called Astro.

Alexa’s latest capabilities are part of the Amazon team’s work on ambient computing—a general term that refers to an underlying artificial intelligence system that surfaces when you need it and recedes into the background when you don’t. This is enabled through the connected network of Amazon devices and services that interact with each other and with users.

“Perhaps the most illustrative example of our ambient AI is Alexa,” Rohit Prasad, senior vice president and head scientist for Alexa at Amazon, tells Popular Science. “Because, it’s not just a spoken language service that you issue a bunch of requests. As an ambient intelligence that is available on many different devices around you, it understands the state of your environment and even acts perhaps on your behalf.”

Alexa can already detect what Prasad calls “global” ambient sounds or sound events, such as glass breaking or a fire or smoke alarm going off. Detecting these events makes your home safer while you’re away, he says: if anything goes wrong, Alexa can send you a notification. It can also detect more innocuous sounds like your dog barking or your partner snoring.

Now, Prasad and his team are building on this pre-trained model for global sound events, which they built using thousands of real-world sound samples. Users will be able to create alerts for their own custom sound events by manually adding five to 10 examples of a specific sound that they would like Alexa to keep an ear out for at home. “All the other kinds of data that you’ve collected via us can be used to make the custom sound events happen with fewer samples,” he says.
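To make the idea of learning a custom sound from a handful of examples more concrete, here is a minimal sketch of one common few-shot approach: averaging the examples into a single "prototype" and comparing new sounds against it. This is an illustration only, not Amazon's method. It assumes a pre-trained model has already turned each audio clip into a numeric embedding; the three-number vectors, the function names, and the 0.9 threshold are all hypothetical.

```python
import math


def mean_vector(vectors):
    """Average equal-length embedding vectors into one prototype vector."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def cosine_similarity(a, b):
    """Return the cosine similarity of two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def detect_custom_sound(embedding, prototype, threshold=0.9):
    """Flag a sound as a match when it is close enough to the prototype.

    The threshold is an assumed value chosen for this toy example.
    """
    return cosine_similarity(embedding, prototype) >= threshold


# Hypothetical embeddings for five user-provided recordings of a
# refrigerator's "door left open" chime. Real embeddings would come from
# a pre-trained audio model and have many more dimensions.
fridge_examples = [
    [0.9, 0.1, 0.0],
    [0.8, 0.2, 0.1],
    [1.0, 0.0, 0.1],
    [0.9, 0.2, 0.0],
    [0.85, 0.1, 0.05],
]
fridge_prototype = mean_vector(fridge_examples)

# A new sound that resembles the examples, and one that does not.
similar_sound = [0.88, 0.15, 0.02]
unrelated_sound = [0.0, 0.2, 0.9]

print(detect_custom_sound(similar_sound, fridge_prototype))
print(detect_custom_sound(unrelated_sound, fridge_prototype))
```

The point of the sketch is that the heavy lifting happens in the pre-trained model that produces the embeddings, which is why only a few user examples are needed to define a new sound.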

This could be something like the refrigerator door being left open by kids after school for more than 10 minutes. “The two refrigerators in my home, they both make different sounds when the door is left open by one of our kids,” says Prasad. That way, even if he’s not at home when his kids are, Alexa could send him a notification if someone didn’t shut the refrigerator door properly.

You could set an alert for a whistling kettle, a washer running, a doorbell ringing, or an oven timer going off while you’re upstairs. “And if you have an elder person in the home who can’t hear well and is watching television, if it’s hooked to a Fire TV, then you can send a message on the TV that someone’s at the door, and that the doorbell rang,” says Prasad.

Ring can tell you the specific items it sees out of place

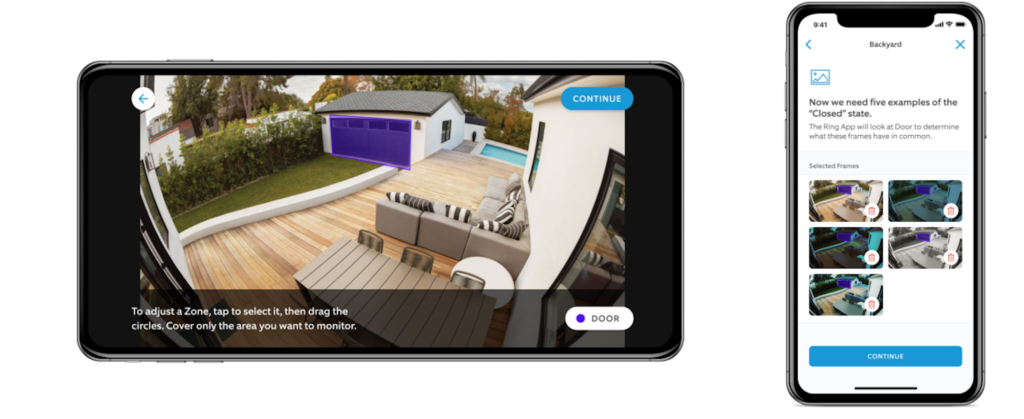

In addition to custom sound events, Alexa could also alert you about certain visual events through its Ring cameras and some Ring devices. “What we found is that Ring cameras, especially for outdoor settings, [are] great for looking at the binary states of objects of interest in your homes,” Prasad says. For example, if you have a Ring camera facing an outdoor shed, you can teach it to check if the door has been left open or not by providing it some pictures for the open state, and for the closed state, and have it send you an alert if it’s open.

“You’re now combining computer vision and few-shot learning techniques,” says Prasad. The team has collected a large sample of publicly available photos of garage and shed doors to help with the pre-training, just like with the audio component of the ambient AI. “But my shed door can look different from the shed you may have, and then the customization is still required, but now it can happen with very few samples.”

Alexa will soon be able to learn your preferences

Last year, Amazon updated Alexa so that if it doesn’t recognize a concept in your request, it will come back to you and ask, “What do you mean by that?”

This could be a request like “set my thermostat to vacation mode,” where “vacation mode” is a setting Alexa doesn’t know. Plus, your preferred temperature for that setting could be 70 degrees instead of 60 degrees. That’s where users can step in and customize Alexa through natural language.

“Typically when you have these alien concepts, or unknown and ambiguous concepts, it will require some input either through human labelers [on the developer end] saying ‘vacation mode’ is a type of setting for a smart appliance like a thermostat,” Prasad explains.

This type of data is hard to gather without real-world experience, and new terms and phrases pop up all the time. The more practical solution was for the team to build out Alexa’s ability for generalized learning or generalized AI. Instead of relying on supervised learning from human labelers at Amazon, Alexa can learn directly from end users, making it easier for them to adapt Alexa to their lives.

In a few months, users will be able to ask Alexa to learn their preferences by saying, “Alexa, learn my preferences.” They can then go through a dialogue with Alexa covering three areas of preferences to start with: food, sports teams, and weather providers, like the Big Sky app.

If you say, “Alexa, I’m a vegetarian,” when Alexa takes you through the dialogue, it will remember that the next time you look for nearby restaurants and prioritize those with vegetarian options. And if you ask for dinner recipes, it will prioritize vegetarian options over others.

For sports teams, if you’ve said that you like the Boston Red Sox for baseball and the New England Patriots for football, and then you ask Alexa for sports highlights on an Echo Show, you’ll get more custom highlights for your preferred teams. And if another family member likes other teams, you can add those to the preferences as well.

“We already know customers express these preferences in their regular interactions many times every day,” says Prasad. “Now we are making it very simple for these preferences to work.” You can go through the preset prompts with Alexa to teach it your preferences, or teach it in the moment. For example, if you ask Alexa for restaurants and it recommends steakhouses, you can say, “Alexa, I’m a vegetarian,” and it will automatically learn that for future encounters.

“These three inventions that are making the complex simple are also illustrative of more generalized learning capabilities, with more self-supervised learning, transfer learning, and few-shot learning, and also deep learning, to make these kinds of interactive dialogues happen,” Prasad says. “This is the hallmark for generalized intelligence,” which is similar to how humans learn.

Alexa learns and grows

These three new features (custom sounds, custom visuals, and preferences) not only come together to improve the AI, but also improve Alexa’s self-learning, self-service, and awareness of your ambient environment. “Alexa is just more aware of your surroundings to help you when you need it,” Prasad says. Along with features like Routines or Blueprints, these new add-ons allow Alexa to give more custom responses without requiring any coding proficiency.

Alexa automatically learns how to improve itself as you use it more. In fact, Prasad says that Alexa can now automatically correct more than 20 percent of its defects without any human supervision. If it did something wrong and you barge in and say, “No, Alexa, I meant that,” it will remember that for the next time you ask for something similar, he says.

In his case, sometimes when he asks Alexa to play BBC, it registers what he says as BPC. “For an AI, simple is hard. Occasionally it recognizes ‘play BPC.’ But it recognizes usage patterns,” Prasad says. That way, it can fix the request automatically without asking every time, “did you mean BBC?”
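As a rough illustration of how usage patterns could drive this kind of automatic fix, the sketch below counts how often a misheard request is followed by the same correction, then starts rewriting the request on its own once the pattern has been seen enough times. This is a simplified assumption for illustration, not a description of how Alexa works; the `RewriteLearner` class, its method names, and the `min_corrections` setting are all hypothetical.

```python
from collections import Counter, defaultdict


class RewriteLearner:
    """Toy model that learns request rewrites from repeated user corrections."""

    def __init__(self, min_corrections=3):
        # How many times a correction must be seen before it is applied
        # automatically (an assumed value, not taken from the article).
        self.min_corrections = min_corrections
        # Maps what was heard to a tally of what the user actually meant.
        self.corrections = defaultdict(Counter)

    def record_correction(self, heard, intended):
        """Log that the user corrected `heard` to `intended`."""
        self.corrections[heard][intended] += 1

    def rewrite(self, heard):
        """Return the learned rewrite if the pattern is strong enough."""
        candidates = self.corrections.get(heard)
        if not candidates:
            return heard
        intended, count = candidates.most_common(1)[0]
        return intended if count >= self.min_corrections else heard


learner = RewriteLearner(min_corrections=2)

# The user corrects the same misrecognition twice.
learner.record_correction("play BPC", "play BBC")
learner.record_correction("play BPC", "play BBC")

# The repeated pattern is now rewritten without asking.
fixed_request = learner.rewrite("play BPC")
# A request with no correction history passes through unchanged.
untouched_request = learner.rewrite("play jazz")
print(fixed_request, "|", untouched_request)
```

A real system would presumably also weigh context and patterns across many users, as Prasad describes, rather than relying on exact string matches from one person.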

“This is the type of automatic learning based on context in both your personalized usage and cohort usage that Alexa is able to be much smarter worldwide and try to estimate defects and correct automatically without any human input,” says Prasad. “If you look at the old days of supervised learning, even with active learning, Alexa will say ‘this is the portion I am having trouble with, let’s get some human input.’ Now that human input comes directly from the end user.”
