1 Machines Can Learn!

A few years ago, I decided I needed to learn how to code simple machine learning algorithms. I had been writing about machine learning as a journalist, and I wanted to understand the nuts and bolts. (My background as a software engineer came in handy.) One of my first projects was to build a rudimentary neural network to try to do what astronomer and mathematician Johannes Kepler did in the early 1600s: analyze data collected by Danish astronomer Tycho Brahe about the positions of Mars to come up with the laws of planetary motion.

I quickly discovered that an artificial neural network (a type of machine learning algorithm that uses networks of computational units called artificial neurons) would require far more data than was available to Kepler. To satisfy the algorithm’s hunger, I generated a decade’s worth of data about the daily positions of planets using a simple simulation of the solar system.

After many false starts and dead ends, I coded a neural network that, given the simulated data, could predict future positions of planets. It was beautiful to observe. The network indeed learned the patterns in the data and could prognosticate about, say, where Mars might be in five years.

I was instantly hooked. Sure, Kepler did much, much more with much less—he came up with overarching laws that could be codified in the symbolic language of math. My neural network simply took in data about prior positions of planets and spit out data about their future positions. It was a black box, its inner workings undecipherable to my nascent skills. Still, it was a visceral experience to witness Kepler’s ghost in the machine.

The project inspired me to learn more about the mathematics that underlies machine learning. The desire to share the beauty of some of this math led to Why Machines Learn.

2 It’s All (Mostly) Vectors.

One of the most amazing things I learned about machine learning is that everything and anything—be it positions of planets, an image of a cat, the audio recording of a bird call—can be turned into a vector.

In machine learning models, vectors are used to represent both the input data and the output data. A vector is simply a sequence of numbers. Each number can be thought of as the distance from the origin along some axis of a coordinate system. For example, here’s one such sequence of three numbers: 5, 8, 13. So, 5 is five steps along the x-axis, 8 is eight steps along the y-axis, and 13 is thirteen steps along the z-axis. If you take these steps, you’ll reach a point in 3-D space, which represents the vector, expressed as the sequence of numbers in brackets, like this: [5 8 13].
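To make the idea concrete, here is a minimal Python sketch, using only the standard library, that treats the sequence 5, 8, 13 as a point in 3-D space. The straight-line distance from the origin that it computes is an extra illustration, not something the text above discusses.

```python
import math

# The vector [5 8 13] from the text: one number per axis.
v = [5, 8, 13]
x, y, z = v  # 5 steps along x, 8 along y, 13 along z

# Straight-line distance from the origin to the point (Euclidean length).
length = math.sqrt(sum(c * c for c in v))

print(f"point ({x}, {y}, {z}) in {len(v)}-D space")
print(f"distance from origin: {length:.3f}")
```

Nothing in the code is specific to three dimensions: the same lines work unchanged for a vector with 10,000 entries.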

Now, let’s say you want your algorithm to represent a grayscale image of a cat. Well, each pixel in that image is a number encoded using one byte, or eight bits, of information, so it has to be a number between zero and 255, where zero means black, 255 means white, and the numbers in between represent varying shades of gray.

If it’s a 100×100 pixel image, then you have 10,000 pixels in total in the image. So if you line up the numerical values of each pixel in a row, voila, you have a vector representing the cat in 10,000-dimensional space. Each element of that vector represents the distance along one of 10,000 axes. A machine learning algorithm encodes the 100×100 image as a 10,000-dimensional vector. As far as the algorithm is concerned, the cat has become a point in this high-dimensional space.
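A minimal sketch of this flattening step, assuming the image is already available as a 100×100 grid of integers. Random numbers stand in for a real cat photo (hypothetical data); loading an actual image file would require an imaging library, which is left out here. Lining up the pixels row after row is an assumed convention; any fixed ordering works as long as every image is flattened the same way.

```python
import random

WIDTH, HEIGHT = 100, 100

# Stand-in for a real 100x100 grayscale photo (hypothetical data):
# each pixel is one byte, i.e. an integer from 0 (black) to 255 (white).
random.seed(42)
image = [[random.randint(0, 255) for _ in range(WIDTH)] for _ in range(HEIGHT)]

def flatten(img):
    """Line up the pixels row after row into a single list of numbers."""
    return [pixel for row in img for pixel in row]

vector = flatten(image)

print(len(vector))  # 10000: one coordinate, i.e. one axis, per pixel
# The pixel in row r, column c becomes coordinate r * WIDTH + c of the vector.
r, c = 3, 7
print(vector[r * WIDTH + c] == image[r][c])  # True
```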

Turning images into vectors and treating them as points in some mathematical space allows a machine learning algorithm to learn about patterns that exist in the data, and then use what it has learned to make predictions about new, unseen data. Suppose, for example, that images of cats tend to land in one region of this space and images of dogs in another. Given a new, unlabeled image, the algorithm simply checks where the associated vector, or the point formed by that image, falls in high-dimensional space and classifies it accordingly. What we have is one very simple type of image recognition algorithm: one that learns, given a bunch of images annotated by humans as cats or dogs, how to map those images into high-dimensional space, and uses that map to make decisions about new images.
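The article doesn't name a specific algorithm, so as an illustrative stand-in, here is a nearest-centroid classifier, one of the simplest ways to "check where a point falls": average the labeled training vectors of each class, then give a new vector the label of the closest average. The four-pixel "images" and their labels are hypothetical toy data; a real system would use 10,000-dimensional vectors and many more examples.

```python
import math

def mean_vector(vectors):
    """Average the vectors coordinate by coordinate."""
    n = len(vectors)
    return [sum(coords) / n for coords in zip(*vectors)]

def distance(a, b):
    """Euclidean distance between two points."""
    return math.sqrt(sum((p - q) ** 2 for p, q in zip(a, b)))

def train(labeled):
    """labeled maps each label to a list of vectors; return one centroid per label."""
    return {label: mean_vector(vs) for label, vs in labeled.items()}

def classify(centroids, v):
    """Assign v the label of the nearest centroid."""
    return min(centroids, key=lambda label: distance(centroids[label], v))

# Hypothetical 4-pixel "images" annotated as cat or dog.
training_data = {
    "cat": [[200, 190, 60, 50], [210, 180, 70, 40], [190, 200, 55, 65]],
    "dog": [[40, 60, 180, 200], [50, 45, 190, 210], [60, 55, 170, 190]],
}

centroids = train(training_data)
print(classify(centroids, [205, 185, 65, 45]))  # cat
print(classify(centroids, [45, 50, 185, 205]))  # dog
```

Because a new vector is labeled by proximity alone, this scheme works well only when each class occupies its own compact region of the space.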

3 Some Machine Learning Algorithms Can Be “Universal Function Approximators.”

One way to think about a machine learning algorithm is that it converts an input, x, into an output, y. The inputs and outputs can be single numbers or vectors. Consider y = f(x). Here, x could be a 10,000-dimensional vector representing a cat or a dog, and y could be 0 for cat and 1 for dog. It’s the machine learning algorithm’s job to find, given enough annotated training data, the best possible function, f, that converts x to y.
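One of the oldest and simplest ways to search for such an f is the perceptron learning rule, sketched here in Python as an illustration; it is a single artificial neuron, not a deep network. The candidate f computes a weighted sum of the inputs and outputs 1 if the sum is positive and 0 otherwise; training nudges the weights toward every example it gets wrong. The 2-D vectors and their cat/dog labels are hypothetical, standing in for 10,000-dimensional images.

```python
def predict(weights, bias, x):
    """Candidate f: output 1 (dog) if the weighted sum is positive, else 0 (cat)."""
    s = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if s > 0 else 0

def train_perceptron(data, epochs=100, lr=1.0):
    """Perceptron learning rule: nudge the weights toward each misclassified example."""
    weights = [0.0] * len(data[0][0])
    bias = 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, y in data:
            error = y - predict(weights, bias, x)  # 0 if correct, +1 or -1 if wrong
            if error != 0:
                mistakes += 1
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
        if mistakes == 0:  # every training example is now mapped correctly
            break
    return weights, bias

# Hypothetical annotated data: 2-D vectors labeled 0 (cat) or 1 (dog).
training_data = [
    ([1.0, 1.0], 0), ([1.5, 0.5], 0), ([0.5, 1.5], 0),
    ([4.0, 4.0], 1), ([4.5, 3.5], 1), ([3.5, 4.5], 1),
]

weights, bias = train_perceptron(training_data)

def f(x):
    """The learned function: maps an input vector x to an output y."""
    return predict(weights, bias, x)

print(f([0.5, 0.5]), f([4.2, 3.9]))  # 0 1
```

A single perceptron can only separate the two classes with a straight line (in higher dimensions, a flat boundary), which is one reason to turn to networks with hidden layers.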

There are mathematical proofs that show that certain machine learning algorithms, such as deep neural networks, are “universal function approximators,” capable in principle of approximating any function, no matter how complex.

A deep neural network has layers of artificial neurons, with an input layer, an output layer, and one or more so-called hidden layers, which are sandwiched between the input and output layers. There’s a mathematical result called the universal approximation theorem that shows that, given an arbitrarily large number of neurons, even a network with just one hidden layer can approximate any continuous function to any desired accuracy, meaning: If a correlation exists in the data between the input and the desired output, then some neural network can represent a very good approximation of a function that implements this correlation, though the theorem itself does not say how to find that network’s weights.

This is a profound result, and one reason why deep neural networks are being trained to do more and more complex tasks, as long as we can provide them with enough pairs of input-output data and make the networks big enough.
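To see why adding neurons helps, here is a hand-built illustration in Python. Rather than training, it chooses the weights of a one-hidden-layer network directly, so that the output traces straight-line segments through evenly spaced points of a target function. It assumes ReLU neurons (each outputs max(0, z)) and uses sin(x) on [0, 2π] as an arbitrary target; neither choice comes from the text.

```python
import math

def relu(z):
    """Artificial neuron activation assumed here: max(0, z)."""
    return max(0.0, z)

def build_network(g, a, b, n_hidden):
    """Hand-pick weights for a one-hidden-layer ReLU network that linearly
    interpolates g at n_hidden + 1 evenly spaced points on [a, b]."""
    knots = [a + (b - a) * k / n_hidden for k in range(n_hidden + 1)]
    slopes = [(g(knots[k + 1]) - g(knots[k])) / (knots[k + 1] - knots[k])
              for k in range(n_hidden)]
    # Hidden neuron k computes relu(x - knots[k]); its output weight is the
    # change in slope at that knot, so the pieces add up to the interpolant.
    out_weights = [slopes[0]] + [slopes[k] - slopes[k - 1] for k in range(1, n_hidden)]
    out_bias = g(a)

    def network(x):
        hidden = [relu(x - knots[k]) for k in range(n_hidden)]
        return out_bias + sum(w * h for w, h in zip(out_weights, hidden))

    return network

def max_error(g, net, a, b, samples=2000):
    """Largest gap between target and network over a fine grid of inputs."""
    xs = [a + (b - a) * i / samples for i in range(samples + 1)]
    return max(abs(g(x) - net(x)) for x in xs)

a, b = 0.0, 2 * math.pi
for n in (4, 16, 64):
    net = build_network(math.sin, a, b, n)
    print(f"{n:3d} hidden neurons: max error {max_error(math.sin, net, a, b):.4f}")
```

Each hidden neuron contributes one bend in the output, so more neurons mean more, shorter segments and a closer fit. Real networks learn their weights from data instead of having them set by hand.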

So, whether it’s a function that takes an image and turns it into a 0 (for cat) or a 1 (for dog), or a function that takes a string of words and converts it into an image for which those words serve as a caption, or potentially even a function that takes a snapshot of the road ahead and spits out instructions for a car to change lanes, come to a halt, or perform some such maneuver, universal function approximators can in principle learn and implement such functions, given enough training data. The possibilities are endless, though it’s worth keeping in mind that correlation does not equal causation.

Lead image: Aree_S / Shutterstock

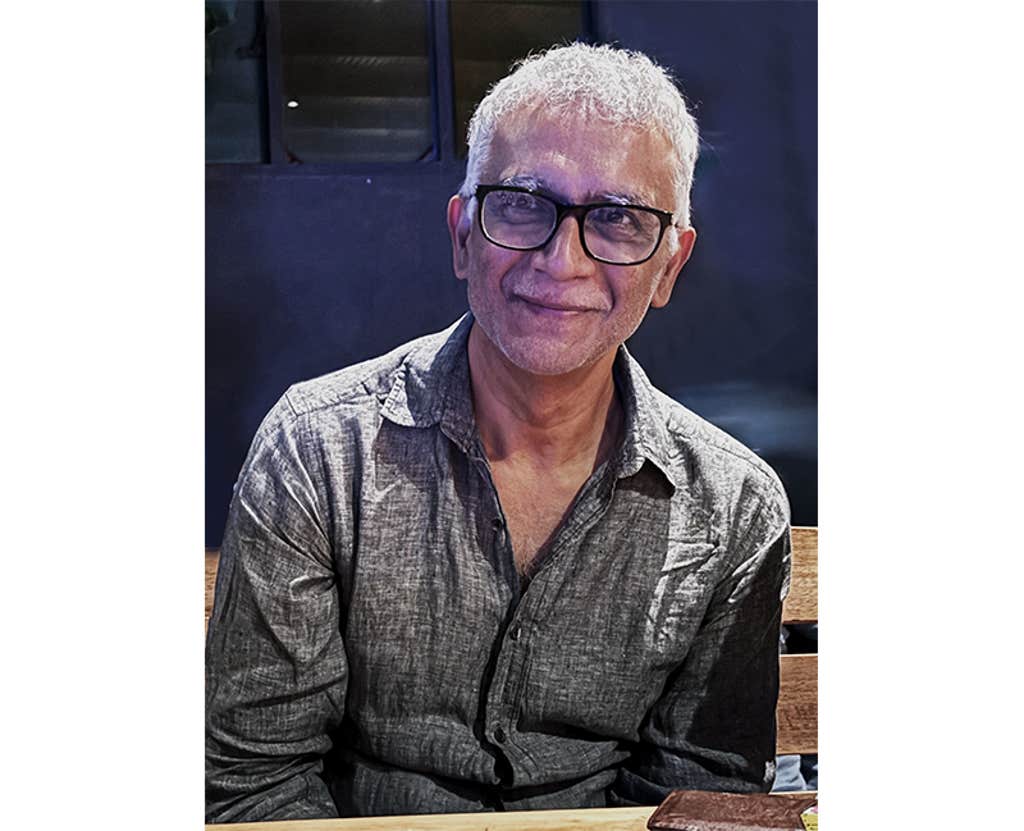

Anil Ananthaswamy

Anil Ananthaswamy is a science journalist who writes about AI and machine learning, physics, and computational neuroscience. He’s a 2019-20 MIT Knight Science Journalism fellow. His latest book is Why Machines Learn: The Elegant Math Behind Modern AI.