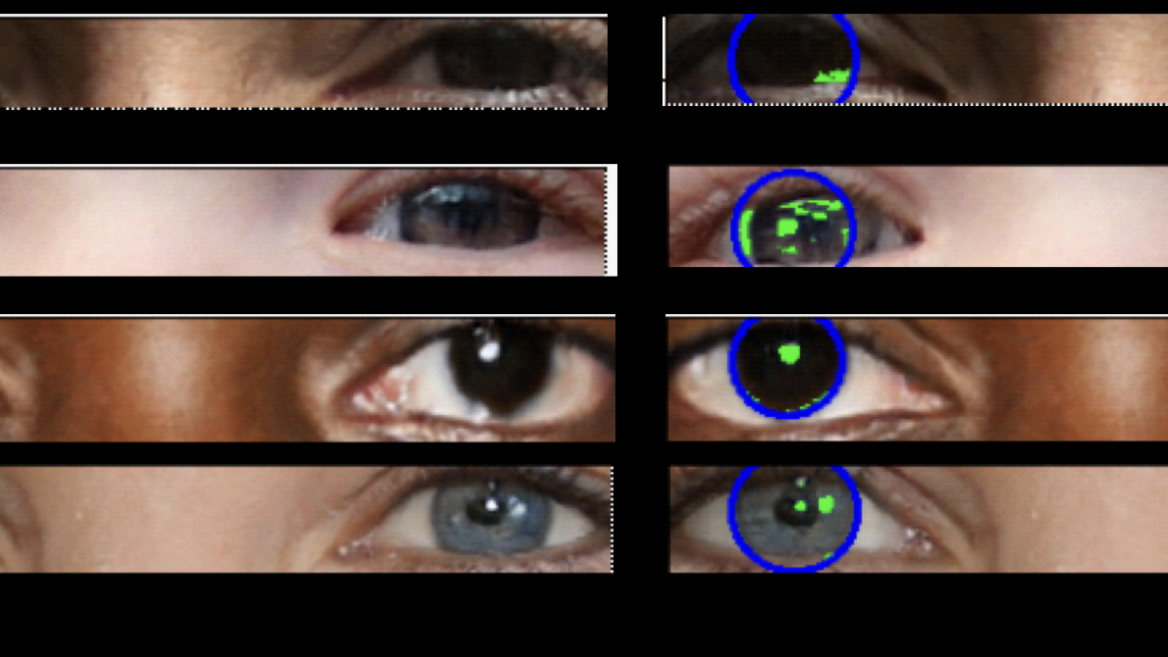

(Image credit: Adejumoke Owolabi)

The eyes, the old saying goes, are the window to the soul — but when it comes to deepfake images, they might be a window into unreality.

That’s according to new research conducted at the University of Hull in the U.K., which applied techniques typically used in observing distant galaxies to determine whether images of human faces were real or not. The idea was sparked when Kevin Pimbblet, a professor of astrophysics at the University, was studying facial imagery created by artificial intelligence (AI) art generators Midjourney and Stable Diffusion. He wondered whether he could use physics to determine which images were fake and which were real. “It dawned on me that the reflections in the eyes were the obvious thing to look at,” he told Space.com.

Deepfakes are fake images or videos of people created by AI trained on mountains of data. When generating an image of a human face, the AI draws on that training to build an unreal face, pixel by pixel. These faces can be built from the ground up or based on actual people, and in the latter case they are often used for malicious purposes. Because real photos contain reflections in the eyes, the AI adds these in, but there are often subtle differences between the two eyes.

Following his instinct, Pimbblet recruited Adejumoke Owolabi, a master's student at the University, to help develop software that could quickly scan the eyes of subjects in various images to see whether those reflections checked out. The pair built a program to assess the differences between the left and right eyeballs in photos of people, real and unreal. The real faces came from a diverse dataset of 70,000 faces on Flickr, while the deepfakes were created by the AI underpinning This Person Does Not Exist, a website that generates realistic images of people who look like they exist, but do not.


It’s obvious once you know it’s there: I refreshed This Person Does Not Exist five times and studied the reflections in the eyes. The faces were impressive; at a glance, nothing stood out to suggest they were fake.

Closer inspection revealed some near-imperceptible differences in the lighting of the two eyeballs. They didn’t quite seem to match. In one case, the AI generated a man wearing glasses, and the reflection in his lens also seemed a little off.

What my eye couldn’t quantify, however, was how different the reflections were. To make that assessment, you’d need a tool that can identify violations of the precise rules of optics. This is where the software Pimbblet and Owolabi built comes in. They used two techniques from the astronomy playbook: “CAS parameters” and “the Gini index.”

In astronomy, CAS parameters can determine the structure of a galaxy by examining the Concentration, Asymmetry and Smoothness (or “clumpiness”) of its light profile. For instance, an elliptical galaxy will have a high C value and low A and S values: its light is concentrated in its center, surrounded by a more diffuse shell, which makes it both smoother and more symmetrical. However, the pair found CAS wasn’t as useful for detecting deepfakes. Concentration works best with a single point of light, but reflections often appear as patches of light scattered across an eyeball. Asymmetry suffers from a similar problem: those patches make the reflection asymmetrical, and Pimbblet said it was hard to get this measure “right”.
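To make the C and A measures concrete, here is a minimal Python sketch using simplified textbook definitions, assuming the input is a 2D NumPy array of pixel brightness centered on the light source. Concentration is taken as 5 log10(r80/r20), where r20 and r80 are the radii enclosing 20% and 80% of the light, and asymmetry as the share of light that fails to match the image rotated by 180 degrees. It skips the background correction and center optimization that real galaxy pipelines use, and the function names are illustrative; the article does not describe how the Hull software implements CAS.

```python
import numpy as np

def concentration(img: np.ndarray) -> float:
    """Simplified CAS concentration, C = 5 * log10(r80 / r20).

    r20 and r80 are the radii (measured from the array center) that enclose
    20% and 80% of the total light. No background subtraction is done.
    """
    ny, nx = img.shape
    y, x = np.indices(img.shape)
    r = np.hypot(y - (ny - 1) / 2, x - (nx - 1) / 2).ravel()
    order = np.argsort(r)
    r_sorted = r[order]
    # Cumulative fraction of total light, moving outward from the center
    cum_frac = np.cumsum(img.ravel()[order]) / img.sum()
    r20 = r_sorted[np.searchsorted(cum_frac, 0.2)]
    r80 = r_sorted[np.searchsorted(cum_frac, 0.8)]
    r20 = max(r20, 0.5)  # half-pixel floor so a lone central pixel can't give log10(inf)
    return float(5 * np.log10(r80 / r20))

def asymmetry(img: np.ndarray) -> float:
    """Simplified CAS asymmetry, A = sum|I - I_180| / sum|I|.

    0 means the light looks identical after a 180-degree rotation about the
    array center; a lone off-center patch that never overlaps its rotated
    copy scores 2.
    """
    rotated = np.rot90(img, 2)  # 180-degree rotation
    return float(np.abs(img - rotated).sum() / np.abs(img).sum())

# Example: a smooth, centered blob versus one off-center highlight
y, x = np.indices((32, 32))
blob = np.exp(-((y - 15.5) ** 2 + (x - 15.5) ** 2) / (2 * 3.0 ** 2))
highlight = np.zeros((32, 32))
highlight[4:7, 4:7] = 1.0

print(f"blob:      C={concentration(blob):.2f}, A={asymmetry(blob):.2f}")
print(f"highlight: A={asymmetry(highlight):.2f}")
```

The single off-center highlight gets the maximum asymmetry score because it never overlaps its own rotated copy, which hints at why scattered patches of reflected light made this measure hard to use on eyes.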

Using the Gini coefficient worked a lot better. This is a way to measure how unequally a quantity is spread across a set of values. It is used to quantify inequality in things such as the distribution of wealth, life expectancy or, perhaps most commonly, income. In this case, Gini was applied to how unequally light is distributed among the pixels of each eye.
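For reference, the form of the Gini coefficient commonly used for a galaxy's light sorts the n pixel values in increasing order, $X_1 \le X_2 \le \dots \le X_n$, and computes the quantity below. It is 0 when every pixel is equally bright and 1 when all the light sits in a single pixel. The article does not say which exact variant the Hull pair used, so treat this as an illustrative assumption:

$$
G = \frac{1}{\overline{|X|}\, n(n-1)} \sum_{i=1}^{n} (2i - n - 1)\,|X_i|
$$

For four pixels with brightnesses 1, 2, 3 and 4, the mean is 2.5 and the sum is $(-3)(1) + (-1)(2) + (1)(3) + (3)(4) = 10$, so $G = 10 / (2.5 \times 4 \times 3) = 1/3$.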

“Gini takes the whole pixel distribution, is able to see if the pixel values are similarly distributed between left and right, and is a robust non-parametric approach to take here,” Pimbblet said.
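The left-versus-right comparison Pimbblet describes can be sketched in a few lines of Python. This is a minimal illustration, assuming each eye has already been cropped into a NumPy array of pixel brightness; the function names and the 0.1 cut-off are hypothetical, since the article does not publish the pair's code or decision threshold.

```python
import numpy as np

def gini(pixels) -> float:
    """Gini coefficient of pixel brightness: 0 = evenly lit, 1 = all light in one pixel."""
    x = np.sort(np.abs(np.asarray(pixels, dtype=float).ravel()))
    n = x.size
    if n < 2 or x.mean() == 0:
        return 0.0  # undefined for tiny or completely dark patches; treat as "no inequality"
    i = np.arange(1, n + 1)  # ranks 1..n of the sorted values
    return float(np.sum((2 * i - n - 1) * x) / (x.mean() * n * (n - 1)))

def eye_gini_difference(left_eye, right_eye) -> float:
    """How differently light is distributed in the two eye crops."""
    return abs(gini(left_eye) - gini(right_eye))

HYPOTHETICAL_THRESHOLD = 0.1  # illustrative only; not a value from the study

def looks_inconsistent(left_eye, right_eye, threshold=HYPOTHETICAL_THRESHOLD) -> bool:
    """Flag a face whose eye reflections differ by more than the chosen threshold."""
    return eye_gini_difference(left_eye, right_eye) > threshold

# Example: one sharp highlight in each eye versus one sharp and one smeared highlight
left = np.full((8, 8), 0.1)
left[2, 5] = 1.0
right_match = np.fliplr(left)  # same pixel values, highlight in a mirrored position
right_smeared = np.full((8, 8), 0.1)
right_smeared[1:4, 1:4] = 0.4

print(looks_inconsistent(left, right_match))    # → False
print(looks_inconsistent(left, right_smeared))  # → True
```

Because Gini depends only on the set of pixel values and not on where they sit, the mirrored highlight scores identically; that position-blindness is one reason it copes better than CAS with reflections scattered across the eyeball.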

The work was presented at the Royal Astronomical Society meeting at the University of Hull on July 15, but is yet to be peer-reviewed and published. The pair are working to turn the study into a publication.

Pimbblet says the software is merely a proof of concept at this stage. The software still flags false positives and false negatives, with an error rate of about three in 10. It has also only been tested on a single AI model so far. “We have not tested against other models, but this would be an obvious next step,” Pimbblet says.

Dan Miller, a psychologist at James Cook University in Australia, said the findings from the study offer useful information, but cautioned they may not be especially relevant to improving human detection of deepfakes, at least not yet, because the method requires sophisticated mathematical modeling of light. However, he noted “the findings could inform the development of deepfake detection software.”

And software looks like it will be necessary, given how sophisticated the fakes are becoming. In a 2023 study, Miller assessed how well participants could spot a deepfake video, giving one group a list of visual artifacts, such as shadows or lighting, to look for. But the research found that intervention didn’t work at all: subjects spotted the fakes only as well as a control group who hadn’t been given the tips (which rather suggests my personal mini-experiment above could be an outlier).

The entire field of AI feels like it has been moving at lightspeed since ChatGPT dropped in late 2022. Pimbblet suggests the pair’s approach would work with other AI image generators, but notes it’s also likely newer models will be able to “solve the physics lighting problem.”

This research also raises an interesting question: If AI can generate reflections that can be assessed with astronomy-based methods… could AI also be used to generate entire galaxies?

Pimbblet says there have been forays into that realm. He points to a 2017 study that assessed how well “generative adversarial networks,” or GANs, could recover features of galaxies from degraded data. (A GAN trains two neural networks against each other, one generating images and one judging whether they look real; it is the kind of technology behind This Person Does Not Exist.) Telescopes on Earth and in space can be limited by noise and background, causing blurring and loss of quality. (Even stunning James Webb Space Telescope images require some cleaning up.)

In the 2017 study, researchers trained a large AI model on images of galaxies, then used the model to try and recover degraded imagery. It wasn’t always perfect — but it was certainly possible to recover features of the galaxies from low-quality imagery.
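One common way to build training data for this kind of recovery task is to take sharp images and deliberately degrade them, so a model can learn to map the blurry, noisy version back to the original. The sketch below assumes the simplest possible degradation, a Gaussian blur standing in for the telescope's point-spread function plus Gaussian noise; the function names, the toy galaxy and the parameter values are hypothetical, and real studies model their instruments far more carefully.

```python
import numpy as np

def gaussian_kernel_1d(sigma: float) -> np.ndarray:
    """Normalized 1D Gaussian, truncated at 3 sigma."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1)
    k = np.exp(-x ** 2 / (2 * sigma ** 2))
    return k / k.sum()

def degrade(image: np.ndarray, psf_sigma: float = 2.0, noise_std: float = 0.05,
            rng=None) -> np.ndarray:
    """Blur with a Gaussian stand-in for the PSF, then add Gaussian noise.

    Assumes the image is larger than the blur kernel along both axes.
    """
    rng = np.random.default_rng() if rng is None else rng
    k = gaussian_kernel_1d(psf_sigma)
    # A 2D Gaussian blur is separable: blur every row, then every column
    blurred = np.apply_along_axis(lambda row: np.convolve(row, k, mode="same"), 1, image)
    blurred = np.apply_along_axis(lambda col: np.convolve(col, k, mode="same"), 0, blurred)
    return blurred + rng.normal(0.0, noise_std, size=image.shape)

# Build (degraded, clean) training pairs from a toy "galaxy": a bright core plus a faint disc
rng = np.random.default_rng(0)
y, x = np.indices((64, 64))
r2 = (y - 32) ** 2 + (x - 32) ** 2
galaxy = np.exp(-r2 / (2 * 2.0 ** 2)) + 0.2 * np.exp(-r2 / (2 * 10.0 ** 2))
pairs = [(degrade(galaxy, rng=rng), galaxy) for _ in range(4)]
print(len(pairs), pairs[0][0].shape)
```

Keeping each clean original alongside its degraded copy is what lets a model be scored on how much of the lost detail it manages to recover.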

A 2019 preprint similarly used GANs to simulate entire galaxies.

The researchers suggest the work would be useful as huge amounts of data pour in from missions observing the universe. There’s no way for humans to look through all of it, so we may need to turn to AI. Generating these galaxies with AI could then, in turn, train AI to hunt for specific kinds of actual galaxies in huge datasets. It all sounds a bit dystopian, but, then again, so does detecting unreal faces with subtle changes in the reflections in their eyeballs.
