computational complexity

By Nick Thieme

January 19, 2022

For years, intermediate measurements made it hard to quantify the complexity of quantum algorithms. New work establishes that those measurements aren’t necessary after all.

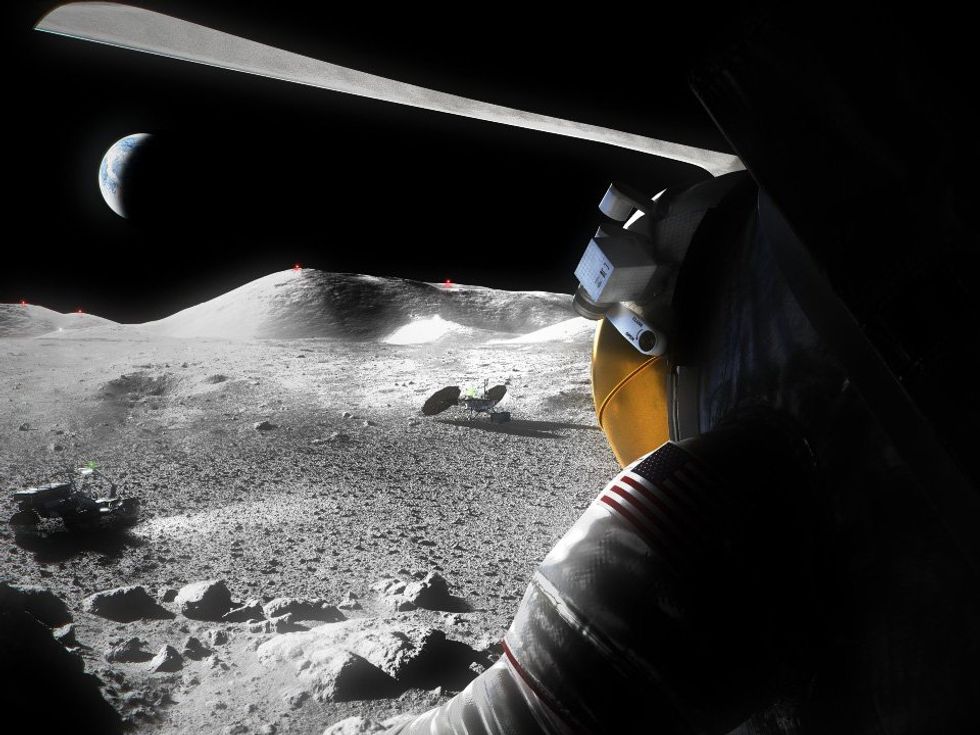

Samuel Velasco/Quanta Magazine

As quantum computers have become more functional, our understanding of them has remained muddled. Work by a pair of computer scientists has clarified part of the picture, providing insight into what can be computed with these futuristic machines.

“It’s a really nice result that has implications for quantum computation,” said John Watrous of the University of Waterloo in Ontario.

The research, posted in June 2020 by Bill Fefferman and Zachary Remscrim of the University of Chicago, proves that any quantum algorithm can be rearranged to move measurements performed in the middle of the calculation to the end of the process, without changing the final result or drastically increasing the amount of memory required to carry out the task. Previously, computer scientists thought that the timing of those measurements affected memory requirements, creating a bifurcated view of the complexity of quantum algorithms.

“This has been quite annoying,” said Fefferman. “We’ve had to talk about two complexity classes — one with intermediate measurements and one without.”

This issue applies exclusively to quantum computers due to the unique way they work. The basic difference between quantum computers and the computers we have at home is the way each stores information. Instead of encoding information in the 0s and 1s of typical bits, quantum computers encode information in higher-dimensional combinations of bits called qubits.

This approach enables denser information storage and sometimes faster calculations. But it also presents a problem. If at any point in a calculation you need to access the information contained in a qubit and you measure it, the qubit collapses from a delicate combination of simultaneously possible bits into a single definite one, possibly affecting all the other qubits in the system.

This can be a problem because virtually all algorithms require knowing the value of a computation as it’s in progress. For instance, an algorithm may contain a statement like “If the variable x is a number, multiply it by 10; if not, leave it alone.” Performing these steps would seem to require knowing what x is at that moment in the computation — a potential challenge for quantum computers, where measuring the state of a particle (to determine what x is) inherently changes it.

But 28 years ago, computer scientists proved it’s possible to avoid this kind of no-win situation. They established that for quantum algorithms, you can wait until the end of a computation to make intermediate measurements without changing the final result.

An essential part of that result showed that you can push intermediate measurements to the end of a computation without drastically increasing the total running time. These features of quantum algorithms — that measurements can be delayed without affecting the answer or the runtime — came to be called the principle of deferred measurement.

This principle fortifies quantum algorithms, but at a cost. Deferring measurements uses a great deal of extra memory space, essentially one extra qubit per deferred measurement. While one bit per measurement might take only a tiny toll on a classical computer with 4 trillion bits, it’s prohibitive given the limited number of qubits currently in the largest quantum computers.

Fefferman and Remscrim’s work resolves this issue in a surprising way. With an abstract proof, they show that subject to a few caveats, anything calculable with intermediate measurements can be calculated without them. Their proof offers a memory-efficient way to defer intermediate measurements — circumventing the memory problems that such measurements created.

“In the most standard scenario, you don’t need intermediate measurements,” Fefferman said.

Fefferman and Remscrim achieved their result by showing that a representative problem called well-conditioned matrix powering is, in a way, equivalent to a different kind of problem with important properties.

The well-conditioned matrix powering problem effectively asks you to find the values for particular entries in a type of matrix (an array of numbers), given some conditions. Fefferman and Remscrim proved that matrix powering is just as hard as any other quantum computing problem that allows for intermediate measurements. This set of problems is called BQL, and the team’s work meant that matrix powering could serve as a representative for all other problems in that class — so anything they proved about matrix powering would be true for all other problems involving intermediate measurements.

At this point, the researchers took advantage of some of their earlier work. In 2016, Fefferman and Cedric Lin proved that a related problem called well-conditioned matrix inversion was equivalent to the hardest problem in a very similar class of problems called BQUL. This class is like BQL’s little sibling. It’s identical to BQL, except that it comes with the requirement that every problem in the class must also be reversible.

In quantum computing, the distinction between reversible and irreversible measurements is essential. If a calculation measures a qubit, it collapses the state of the qubit, making the initial information impossible to recover. As a result, all measurements in quantum algorithms are innately irreversible.

That means that BQUL is not just the reversible version of BQL; it’s also BQL without any intermediate measurements (because intermediate measurements, like all quantum measurements, would be irreversible, violating the signal condition of the class). The 2016 work proved that matrix inversion is a prototypical quantum calculation without intermediate measurements — that is, a fully representative problem for BQUL.

The new paper builds on that by connecting the two, proving that well-conditioned matrix powering, which represents all problems with intermediate measurements, can be reduced to well-conditioned matrix inversion, which represents all problems that cannot feature intermediate measurements. In other words, any quantum computing problem with intermediate measurements can be reduced to a quantum computing problem without intermediate measurements.

This means that for quantum computers with limited memory, researchers no longer need to worry about intermediate measurements when classifying the memory needs of different types of quantum algorithms.

In 2020, a group of researchers at Princeton University — Ran Raz, Uma Girish and Wei Zhan — independently proved a slightly weaker but nearly identical result that they posted three days after Fefferman and Rimscrim’s work. Raz and Girish later extended the result, proving that intermediate measurements can be deferred in both a time-efficient and space-efficient way for a more limited class of computers.

Altogether, the recent work provides a much better understanding of how limited-memory quantum computation works. With this theoretical guarantee, researchers have a road map for translating their theory into applied algorithms. Quantum algorithms are now free, in a sense, to proceed without the prohibitive costs of deferred measurements.

Note: This article have been indexed to our site. We do not claim legitimacy, ownership or copyright of any of the content above. To see the article at original source Click Here